Accessing a Private GKE Cluster Using Bastion Host and Service Account Impersonation

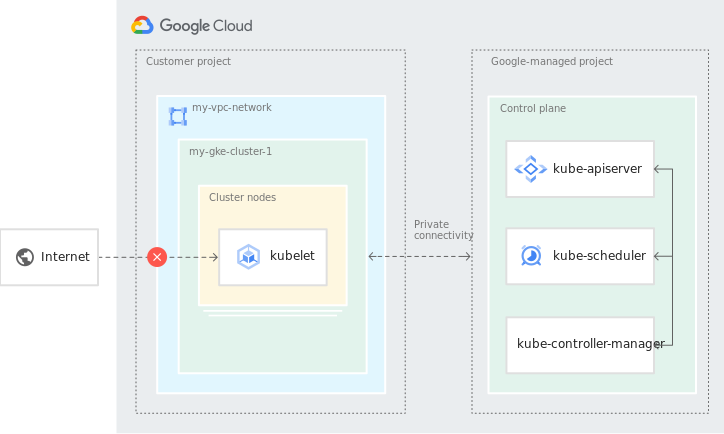

Accessing a private cluster while impersonating a service account was more challenging than expected. This blog post describes the challenges, and their solutions, of connecting kubectl on your local computer to a private GKE cluster. The GKE cluster is configured with master authorized networks, so general access to the cluster master has to go through an SSH tunnel via a bastion host.

As it turns out, Google has a good description of exactly this setup: https://cloud.google.com/kubernetes-engine/docs/tutorials/private-cluster-bastion. I followed this recipe; the cluster and bastion host had already been created for me. At first everything seemed to work, but I soon discovered that kubectl was not using Service Account impersonation. I also had issues creating and renewing the auth token.

Below I will demonstrate the issues I encountered and how they were resolved.

The GKE cluster and bastion host were already configured, and I had access to the bastion host through IAP with my personal user account. So I created the SSH tunnel through the bastion host:

```
$ gcloud compute ssh bastion-host --tunnel-through-iap --project=gke --zone=europe-west1-b -- -4 -L8888:localhost:8888 -N -q -f
```
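Because `-f` sends ssh to the background, a tunnel that failed to start or later dropped is easy to miss. A minimal bash sketch that checks whether anything is listening on the forwarded port, assuming the IPv4 forward on port 8888 set up by the `-4 -L8888:localhost:8888` arguments; `tunnel_up` is a hypothetical helper name and relies on bash's `/dev/tcp` pseudo-device:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds if something accepts TCP connections on
# 127.0.0.1:<port>. Uses bash's /dev/tcp pseudo-device, so run it with bash.
tunnel_up() {
  local port="${1:-8888}"  # default matches the -L8888 forward
  # Open fd 3 in a subshell so the connection is closed when the subshell
  # exits; a failed connect makes the subshell exit non-zero.
  (exec 3<>"/dev/tcp/127.0.0.1/${port}") 2>/dev/null
}
```

For example, run `tunnel_up || echo "no tunnel on port 8888"` before kubectl. A listening port only shows that ssh is still forwarding; it does not prove the proxy on the bastion host is reachable.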

I continued to follow Google's recipe, but added the `--impersonate-service-account` flag to the `gcloud container clusters get-credentials` command.

`gcloud container clusters get-credentials` writes a kubeconfig entry with all the details kubectl needs to access the cluster. See https://cloud.google.com/sdk/gcloud/reference/container/clusters/get-credentials.

```
$ gcloud container clusters get-credentials --internal-ip gke-west1 --region europe-west1 --project gke --impersonate-service-account xxxxx@xxxxxx.iam.gserviceaccount.com
$ export HTTPS_PROXY=localhost:8888
$ kubectl get ns
NAME              STATUS   AGE
bookinfo          Active   41d
default           Active   46d
gmp-public        Active   46d
gmp-system        Active   46d
istio-system      Active   42d
kube-node-lease   Active   46d
kube-public       Active   46d
kube-system       Active   46d
```

So far so good: `kubectl get ns` worked as expected. But when I tried to create a new namespace, I got this error:

```
$ kubectl create namespace test
Error from server (Forbidden): namespaces is forbidden: User "user@domain.com" cannot create resource "namespaces" in API group "" at the cluster scope: requires one of ["container.namespaces.create"] permission(s).
```

It turned out that kubectl was accessing the cluster as my personal user account, user@domain.com, which does not have permission to create namespaces.
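A quicker way to spot this is to ask the API server which identity it sees. A minimal sketch, assuming kubectl 1.28 or later against a cluster that serves the SelfSubjectReview API, where `kubectl auth whoami` is available; `assert_kubectl_identity` is a hypothetical helper and `EXPECTED_SA` a placeholder:

```bash
#!/usr/bin/env bash
# Placeholder: the service account kubectl is supposed to impersonate.
EXPECTED_SA="xxxxx@xxxxxx.iam.gserviceaccount.com"

# Hypothetical check: fail unless the API server sees the expected account.
# Requires `kubectl auth whoami` (kubectl 1.28+, assumed).
assert_kubectl_identity() {
  local user
  user="$(kubectl auth whoami -o jsonpath='{.status.userInfo.username}')"
  if [ "$user" != "$EXPECTED_SA" ]; then
    echo "kubectl is authenticated as '$user', expected '$EXPECTED_SA'" >&2
    return 1
  fi
}
```

In the situation above, this check would have reported `user@domain.com` instead of the Service Account before any write was attempted.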

After some troubleshooting, I found this blog post: https://binx.io/2021/10/07/configure-impersonated-gke-cluster-access-for-kubectl/.

To obtain an access token, kubectl runs `gcloud config config-helper --format=json`, as defined in the kubeconfig file. When kubectl refreshes the authentication token, it runs that command as stored in the kubeconfig, without the `--impersonate-service-account` flag used originally, so the new token is issued for the account of the active gcloud configuration. Following that blog post, I created a separate gcloud configuration for Service Account impersonation and, within it, ran `gcloud config set auth/impersonate_service_account`, which makes every gcloud command in that configuration impersonate the Service Account.

```
$ gcloud config configurations create impersonated
$ gcloud config set auth/impersonate_service_account xxxxx@xxxxxx.iam.gserviceaccount.com
```
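The two-configuration setup can be scripted so the tunnel and the impersonated commands never share a configuration. A minimal sketch, assuming the personal-account configuration is named `default`; a freshly created configuration does not inherit the account from `default`, so the sketch also sets it (an assumption about your setup, since the post shows only the impersonation property). `USER_ACCOUNT`, `SA_EMAIL` and both function names are hypothetical:

```bash
#!/usr/bin/env bash
# Placeholders: replace with your own user account and service account.
USER_ACCOUNT="user@domain.com"
SA_EMAIL="xxxxx@xxxxxx.iam.gserviceaccount.com"

# Create the "impersonated" configuration (create also activates it).
setup_impersonated_config() {
  gcloud config configurations create impersonated
  # Assumption: the base credentials used to impersonate the service account.
  gcloud config set account "$USER_ACCOUNT"
  # Every gcloud call in this configuration, including the token refresh
  # that kubectl triggers, now runs as the service account.
  gcloud config set auth/impersonate_service_account "$SA_EMAIL"
}

# Open the IAP tunnel with the personal-account configuration, whatever
# configuration is currently active.
open_tunnel() {
  gcloud compute ssh bastion-host --configuration=default \
    --tunnel-through-iap --project=gke --zone=europe-west1-b \
    -- -4 -L8888:localhost:8888 -N -q -f
}
```

Passing `--configuration` per command, rather than switching the active configuration back and forth, keeps `impersonated` active for kubectl's background token refreshes.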

However, the `get-credentials` command failed when run with the Service Account credentials:

```
$ gcloud container clusters get-credentials --internal-ip gke-west1 --region europe-west1 --project gke
WARNING: This command is using service account impersonation. All API calls will be executed as [xxxxx@xxxxxx.iam.gserviceaccount.com].
ERROR: (gcloud.container.clusters.get-credentials) There was a problem refreshing your current auth tokens: Proxy URL had no scheme, should start with http:// or https://
```

gcloud also reads the HTTPS_PROXY environment variable, and `localhost:8888` has no scheme. With the variable unset, the get-credentials command worked:
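The error above is triggered by the missing `http://` or `https://` prefix. A small pre-flight check in bash; `proxy_has_scheme` and `check_https_proxy` are hypothetical helpers that mirror the message gcloud printed, not gcloud's own validation code. It only catches the formatting problem; the fix in this post was to keep HTTPS_PROXY out of gcloud's environment altogether.

```bash
#!/usr/bin/env bash
# Hypothetical check mirroring gcloud's message:
# "Proxy URL had no scheme, should start with http:// or https://"
proxy_has_scheme() {
  case "$1" in
    http://*|https://*) return 0 ;;
    *) return 1 ;;
  esac
}

# Print a warning and return non-zero if HTTPS_PROXY is set without a scheme.
check_https_proxy() {
  if [ -n "${HTTPS_PROXY:-}" ] && ! proxy_has_scheme "$HTTPS_PROXY"; then
    echo "HTTPS_PROXY=$HTTPS_PROXY has no scheme; gcloud will reject it" >&2
    return 1
  fi
}
```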

```
$ unset HTTPS_PROXY
$ gcloud container clusters get-credentials --internal-ip gke-west1 --region europe-west1 --project gke
WARNING: This command is using service account impersonation. All API calls will be executed as [xxxxx@xxxxxx.iam.gserviceaccount.com].
WARNING: This command is using service account impersonation. All API calls will be executed as [xxxxx@xxxxxx.iam.gserviceaccount.com].
Fetching cluster endpoint and auth data.
kubeconfig entry generated for gke-west1.
```

But now kubectl failed, because it needs to go through the tunnel to reach the GKE cluster API:

```
$ kubectl get ns
Unable to connect to the server: dial tcp 172.16.0.2:443: i/o timeout
```

If HTTPS_PROXY is set again, kubectl works until the next auth token refresh. A permanent fix is to set the proxy for each individual cluster in the kubeconfig file, located at `$HOME/.kube/config`:

```yaml
apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <redacted>
    proxy-url: http://localhost:8888
    server: https://172.16.0.2
  name: gke-west1
# (other fields and entries omitted)
```

Now kubectl works as expected:

```
$ kubectl create namespace test
namespace/test created
```
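Instead of editing `$HOME/.kube/config` by hand, the same `proxy-url` entry can be written with `kubectl config set-cluster`, which accepts a `--proxy-url` flag in recent kubectl releases. A sketch, assuming the current context points at the private cluster; `set_cluster_proxy` is a hypothetical helper. Since get-credentials typically names the entry `gke_<project>_<location>_<cluster>`, the sketch reads the name from the current context instead of hard-coding it:

```bash
#!/usr/bin/env bash
# Hypothetical helper: add proxy-url to the cluster entry of the current
# kubeconfig context.
set_cluster_proxy() {
  local proxy="${1:-http://localhost:8888}"  # local end of the SSH tunnel
  local cluster
  # --minify limits the output to the current context's cluster.
  cluster="$(kubectl config view --minify -o jsonpath='{.clusters[0].name}')"
  kubectl config set-cluster "$cluster" --proxy-url="$proxy"
}
```

Because get-credentials writes this cluster entry, it is worth checking that `proxy-url` is still present after running it again.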

Summary

After a lot of debugging, it turned out that Google's recipe works fine when the same user credentials are used to access both the bastion host and the GKE cluster, and when both kubectl and gcloud can be proxied through the bastion host. In this case, however, I had to access the GKE cluster using Service Account impersonation, and the gcloud command did not work when proxied through the bastion host with the Service Account credentials.

The fact that kubectl also runs a gcloud command made troubleshooting harder. For example, if I accidentally ran kubectl without the HTTPS_PROXY variable set, the token was renewed successfully behind the scenes, but the kubectl command still failed because of the missing proxy config. So I set HTTPS_PROXY again, and everything seemed to work for a while, until the token had to be renewed and the command failed again. These apparently random kubectl failures made me suspect that the bastion host connection was unstable.
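Since the failures lined up with token renewals, it helps to see which identity gcloud will use and when the current token expires. A minimal sketch, assuming the `credential.token_expiry` field that `gcloud config config-helper` reports; `show_token_status` is a hypothetical helper, and it should be run with HTTPS_PROXY unset, for the reasons described above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: show the impersonated account of the active gcloud
# configuration and the expiry of the token kubectl gets from config-helper.
show_token_status() {
  local sa expiry
  # Prints an empty value when no impersonation is configured.
  sa="$(gcloud config get-value auth/impersonate_service_account 2>/dev/null)"
  # Assumption: config-helper exposes credential.token_expiry.
  expiry="$(gcloud config config-helper --format='value(credential.token_expiry)')"
  echo "impersonating: ${sa:-<none>}"
  echo "token expires: ${expiry}"
}
```

Comparing the expiry time with the moment kubectl starts failing makes it easier to tell a token-refresh problem from an unstable tunnel.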

Below are the issues I encountered when accessing a private GKE cluster with Service Account impersonation through an SSH proxy, and the actions needed to resolve them.

kubectl was executing commands as the logged-in user, not as the Service Account it was supposed to impersonate:

- Create two gcloud configurations: one for managing the SSH tunnel and one for executing commands as the impersonated Service Account.

- Impersonate the Service Account at the configuration level, not by adding the flag to each command.

gcloud and kubectl read the same HTTPS_PROXY environment variable but need different proxy settings:

- Set the proxy for the cluster in `$HOME/.kube/config` instead of in the HTTPS_PROXY environment variable.

Accessing a Private GKE Cluster Using Bastion Host and Service Account Impersonation was originally published in Compendium on Medium.