Google Cloud quotas — with Terraform

Setting quotas on the Google Cloud resources your project uses is a valuable way to ensure that billing never exceeds your budget. The quotas are easy to set up manually, but managing them as infrastructure-as-code brings further benefits, such as disaster recovery, security, consistency and faster deployments, to name a few. This blog post shows how these Google Cloud resource quotas can be managed with Terraform.

The official docs from Google on how to manage Google Cloud resource quotas with Terraform can be found here: https://cloud.google.com/service-usage/docs/terraform-integration . I will base the blog post on this foundation, and elaborate on my findings while implementing the quotas with Terraform.

Google Cloud console — quotas view

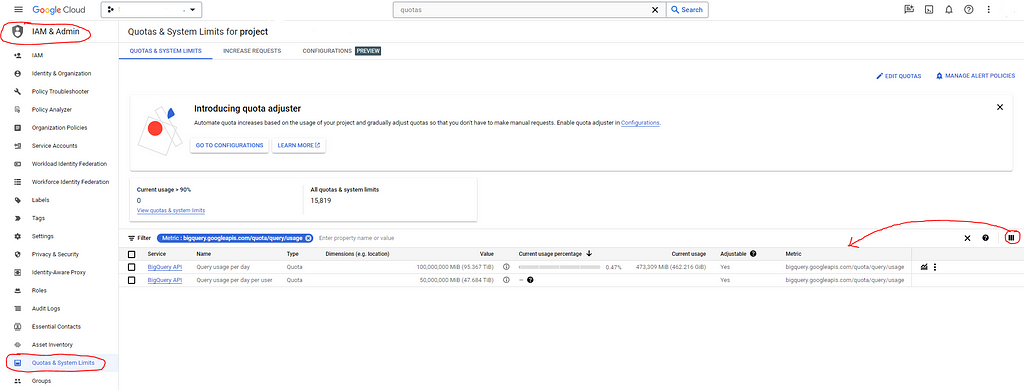

First, if you want an overview of all Google Cloud resource quotas, you can find it in the Google Cloud console under IAM & Admin → Quotas & System Limits. A tip is to include the “Metric” column in the quota view. This way, you can see the name of the metric you need to provide when working with Terraform.

In this blog post we will manage two Google BigQuery quotas with Terraform: the allowed query usage at the project level, and the allowed query usage per user.

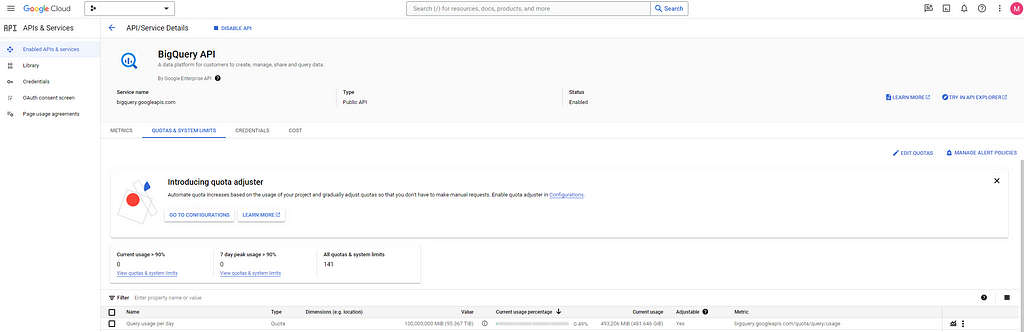

For a more detailed look at every BigQuery quota, navigate to APIs & Services → BigQuery API → Quotas & System Limits, as shown in the accompanying screenshot.

Here, too, we have added the “Metric” column to the view.

Terraform — defining quotas

The code I will present in this section is from my google-cloud-quotas GitHub repository: https://github.com/magnusstuhr/google-cloud-quotas .

Managing Google Cloud resource quotas with Terraform is currently only available through the “google-beta” Terraform provider. We will use the “google_service_usage_consumer_quota_override” resource for managing these quotas, which is documented in the Terraform registry here: https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/service_usage_consumer_quota_override

First, we need to set up our Terraform “main.tf” file with the required “google-beta” provider as follows:

https://medium.com/media/f42a523cc625cd5247b13aedd6ae1386/href
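Since the embedded snippet may not render everywhere, here is a minimal sketch of what such a “main.tf” could look like. The version constraint and the project ID are assumptions for illustration, not values taken from the original repository; pin the provider to whatever version you have actually tested.

```hcl
terraform {
  required_providers {
    # The quota override resource lives in the beta provider.
    google-beta = {
      source = "hashicorp/google-beta"
      # Assumption: any reasonably recent release; adjust to your needs.
      version = ">= 4.0"
    }
  }
}

provider "google-beta" {
  # Hypothetical placeholder; replace with your own project ID.
  project = "my-project-id"
}
```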

After this, we can run “terraform init -upgrade”, which resolves the provider dependency and creates a “.terraform.lock.hcl” dependency lock file.

Now, we can go ahead and define our first Terraform resource, for the BigQuery query usage on the Google Cloud project-level per day:

https://medium.com/media/7f6bd91ca0e72da65e27958c3b32c014/href
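As a rough sketch of what such a resource can look like, assuming the metric name and limit shown here match what the “Metric” column and the API report for your project (verify both before applying). The resource name and project ID are hypothetical, and the override value assumes the API expects this quota in MiB.

```hcl
resource "google_service_usage_consumer_quota_override" "bigquery_query_usage_per_day" {
  provider = google-beta

  # Hypothetical placeholder; replace with your own project ID.
  project = "my-project-id"
  service = "bigquery.googleapis.com"

  # Assumption: the metric name as shown in the "Metric" column of the quota view.
  metric = urlencode("bigquery.googleapis.com/quota/query/usage")

  # Assumption: per-day, per-project limit, written without the leading "1"
  # and without curly braces.
  limit = urlencode("/d/project")

  # Assumption: value in MiB, so 100 TiB = 100 * 1024 * 1024 = 104857600.
  override_value = "104857600"

  # Skip the safety check that rejects decreases of more than 10%.
  force = true
}
```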

Now, as you might have noticed, the “metric” is the metric that we saw in the Google Cloud console by adding the “Metric” column in the quota view.

The “limit” is what Google calls the “limitUnit” in their API responses for the quotas, and it is not shown in the Google Cloud console. I have not yet found a proper way of determining what the limit should be for each quota override; I worked it out by inspecting the network traffic in the Google Cloud console.

The “override_value” field contains the value you want to override the quota with. In this case we set the total project-level query usage for BigQuery to 100 TB of scanned data per day. Based on the BigQuery on-demand billing model, at a rate of $5 per TB scanned, this corresponds to approximately $500 in billing.
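The arithmetic behind the override value can be sketched with Terraform locals. This is only an illustration under a few assumptions: that the API expects this quota in MiB, that the post's “TB” can be treated as TiB, and that the rate is the $5 per TB implied by the $500 figure. All local names are hypothetical.

```hcl
locals {
  # Assumption: desired project-level limit per day, treating "TB" as TiB.
  project_daily_tib = 100

  # Assumption: the API expects the quota in MiB.
  # 1 TiB = 1024 * 1024 MiB, so 100 TiB = 104857600 MiB.
  project_daily_mib = local.project_daily_tib * 1024 * 1024

  # override_value is a string attribute, so convert the number explicitly.
  project_override_value = tostring(local.project_daily_mib)

  # Rate implied by the figures in this post; check current pricing.
  usd_per_tib = 5

  # Upper bound on on-demand query cost per day under these assumptions.
  estimated_max_daily_cost_usd = local.project_daily_tib * local.usd_per_tib
}
```

Keeping the conversion in one place makes it easier to change the limit later without recalculating the MiB value by hand.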

The “force” field is optional, and has the following explanation from the official Terraform documentation, linked to earlier in this post: “If the new quota would decrease the existing quota by more than 10%, the request is rejected. If force is true, that safety check is ignored.”

Next, we can go ahead and define our second Terraform resource, for the BigQuery query usage per user per day within the Google Cloud project:

https://medium.com/media/1f584040a1e7f62e96d6199f215f29dc/href
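A sketch of the per-user variant follows. It assumes the same metric as the project-level quota with a per-user limit, which you should verify against the “Metric” column and the API response for your project. The resource name and project ID are hypothetical, and the value again assumes MiB.

```hcl
resource "google_service_usage_consumer_quota_override" "bigquery_query_usage_per_day_per_user" {
  provider = google-beta

  # Hypothetical placeholder; replace with your own project ID.
  project = "my-project-id"
  service = "bigquery.googleapis.com"

  # Assumption: same metric as the project-level quota.
  metric = urlencode("bigquery.googleapis.com/quota/query/usage")

  # Assumption: per-day, per-project, per-user limit.
  limit = urlencode("/d/project/user")

  # Assumption: value in MiB, so 5 TiB = 5 * 1024 * 1024 = 5242880.
  override_value = "5242880"

  # Skip the safety check that rejects decreases of more than 10%.
  force = true
}
```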

We have now limited both the overall project query usage in BigQuery and how much of that quota each user is allowed to spend, which in our case is 5 TB.

Potential pitfalls

One specific error I stumbled upon when working with this was the following:

COMMON_QUOTA_CONSUMER_OVERRIDE_ALREADY_EXISTS

“Error 409: Cannot create consumer override. The override specified in the request already exists. Please call UpdateConsumerOverride instead.”

This error typically occurs if the quota is managed elsewhere, either in Terraform or via the Google Cloud console.

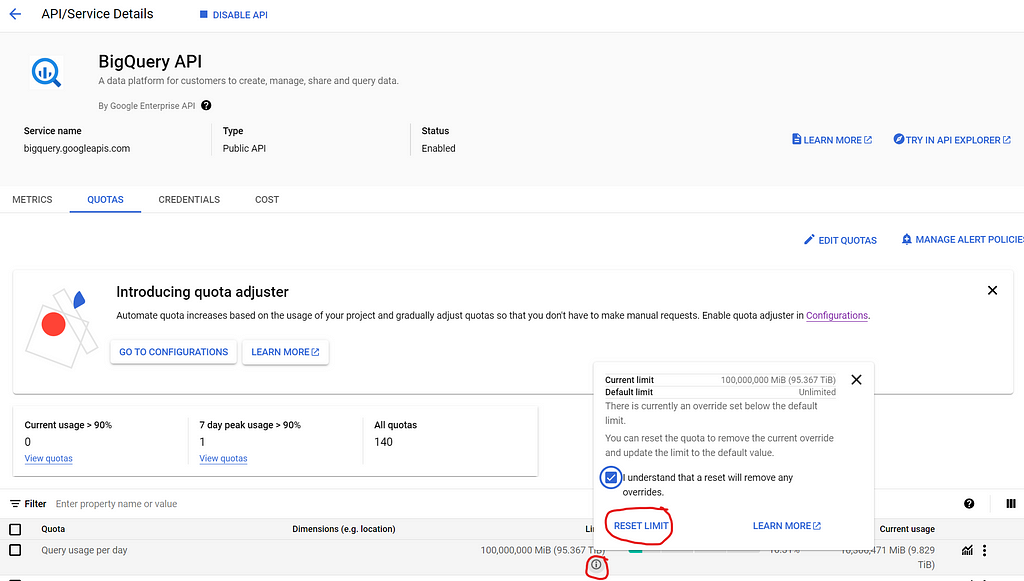

I initially tested overriding the default quotas directly via the Google Cloud console, and then got this error when trying to apply the Terraform resources.

To fix this problem when it occurs, you have to reset the quota so that Terraform can manage it. You might think that the quota is reset by just reverting to the default value in the Google Cloud console, but the reset must actually be done through the explicit reset functionality in the Google Cloud console, as shown in the screenshot in this section.

Conclusion

We have looked at how to manage Google Cloud quotas with Terraform, using two different BigQuery query usage quotas as examples: one at the project level and one per user in the project. Managing such quotas with Terraform is fairly straightforward, and I would argue that they should always be managed as infrastructure-as-code, with all the benefits that brings.

Google Cloud quotas — with Terraform was originally published in Compendium on Medium.