How to Sharpen Your Unit Tests

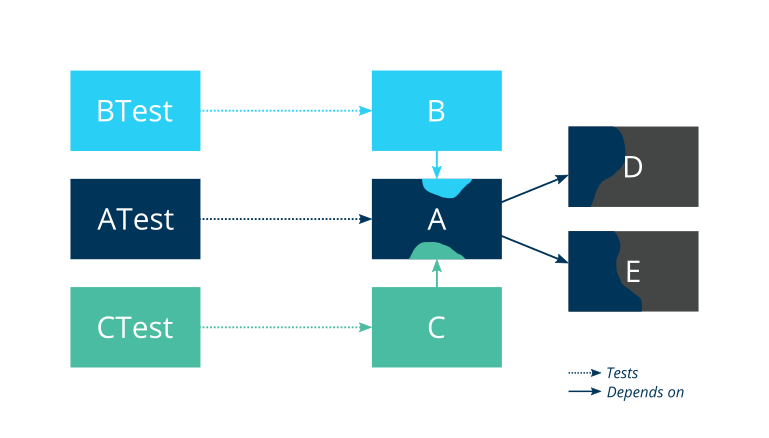

When I’m working on my hobby projects, I try to follow the principles of TDD as well as I can. Writing a failing unit test before I implement a new feature or change an existing one is one of those principles. I also keep an eye on test coverage to make sure there are no gaps, though I have to say that I don’t care as much about line coverage as I do about mutation coverage. Yet what often happens is that my unit tests become a bit “blurred”: unit tests on class A are supposed to reside in a test class ATest, but too often it turns out that some of the functionality in class A is covered by unit tests in test classes BTest and CTest, or that unit tests in ATest only pass through class A and in reality test functionality in classes D or E.

There are many reasons why unit tests can become blurred. One reason may be that some of the functionality in class A is hard to test directly, but easy to test through class B. Another may be that some functionality requires a bit of set-up through class C. As a result, the unit tests end up in test classes BTest and CTest respectively, even though they are in reality unit tests on class A.

Here’s another reason: you wrote a unit test in CTest to test some functionality in C, but it happens to also test some functionality in class A as a side effect. As a consequence, part of class A already showed up as covered in the test coverage reports, so you didn’t realize that some of the unit tests on A now reside in test class CTest.

Finally, a very common reason, and also a very tricky situation to handle properly without the right tools: you started out with a single class A, but it grew too big, so you extracted two new classes D and E. Luckily, you have good test coverage, so refactoring the source code, including the extraction of two classes, isn’t a big risk. Next, you also moved the unit tests that obviously test functionality in classes D and E only to the newly created test classes DTest and ETest. But not all of the unit tests were that clear-cut between classes A, D and/or E, so they remained in ATest, especially if they continued to use class A’s interface as their main entry point into the source code.

Sure, as long as your test coverage is sufficiently high, and your unit tests are close enough to the functionality they’re testing, this isn’t a big problem. And it’s not a problem either that unit tests in test class BTest rely on source code in class A, since class B depends on it. In most cases, that’s just how it needs to be anyway. The problem is when part of the source code in class A is covered by unit tests in test class BTest only. I like it that if I make a mistake in class A, e.g. while refactoring it, at least one of the unit tests in test class ATest will start to fail. There may be unit tests in other test classes that start to fail too, because they rely on functionality in class A, but a bug in class A should always have consequences for at least one unit test in test class ATest.
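To make that concrete, here is a minimal sketch of the situation in plain Java. The classes, the methods `clamp` and `label`, and the tiny `Checks.check` helper are all hypothetical, made up for illustration; in a real project you would use a test framework instead of the hand-rolled helper.

```java
// Hypothetical classes for illustration only; the names mirror the A/B example in the text.
class A {
    // Clamps a percentage into the range 0..100.
    static int clamp(int percent) {
        if (percent < 0) return 0;
        if (percent > 100) return 100; // upper bound: not exercised by ATest below
        return percent;
    }
}

class B {
    // B depends on A, which is perfectly fine in itself.
    static String label(int percent) {
        return A.clamp(percent) + "%";
    }
}

class Checks {
    // Tiny stand-in for a test framework assertion.
    static void check(boolean condition, String description) {
        if (!condition) throw new AssertionError(description);
    }
}

class ATest {
    static void run() {
        Checks.check(A.clamp(-5) == 0, "negative values clamp to 0");
        Checks.check(A.clamp(42) == 42, "in-range values pass through");
        // Nothing here touches the upper bound of A.clamp.
    }
}

class BTest {
    static void run() {
        // Written to test B, but it is also the only test reaching A's upper bound.
        Checks.check(B.label(150).equals("100%"), "labels are capped at 100%");
    }
}

class BlurredTestsDemo {
    public static void main(String[] args) {
        ATest.run();
        BTest.run();
        System.out.println("all checks passed");
    }
}
```

Both test classes pass and a coverage report would show `A.clamp` as fully covered. But if the upper bound in `A.clamp` were broken, say by returning 99 instead of 100, only `BTest` would fail, and `ATest` would stay green.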

Figuring out in which class you’ve made a mistake is not a problem if class A is the only one you’ve been working on. However, if you’ve made changes to classes A, B, C and D, it’s nice if you can assume that classes B and D are most probably fine, since only test classes ATest and CTest have failing unit tests. Of course, theoretically it’s still possible that you created a sneaky problem in class B that somehow was able to circumvent the unit tests in test class BTest and failed a couple of unit tests in test classes ATest and CTest instead. Or maybe the real problem is that one of the unit tests in test class BTest is incorrect; that’s also a possibility. But those should really be rare exceptions.

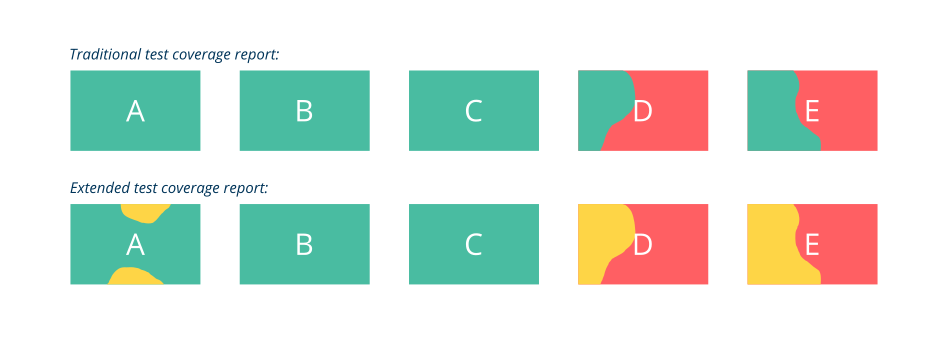

So how can you keep your test classes from blurring into neighboring classes? As far as I know, test coverage reporting tools currently have no out-of-the-box support for indicating to what extent class A is covered by the unit tests in test class ATest alone. I would love an extension that shows not only which parts of the source code aren’t covered (red) and which parts are (green), but that also distinguishes between parts covered by the “right” test class (green) and parts covered only by other test classes (amber). Which test class is “right” for which source code class could depend on convention, annotations or whatever works best. My simple rule is to add the suffix “Test” to the source code class name.
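The red/amber/green idea can be sketched in a few lines of Java. This is only an illustration under assumptions: the class `CoverageSharpness`, its `Status` enum and both methods are hypothetical names, and the set of covering test classes is assumed to come from some coverage or mutation report that the sketch does not parse.

```java
import java.util.Set;

// Hypothetical helper, not part of any coverage tool.
class CoverageSharpness {
    enum Status { RED, AMBER, GREEN }

    // Convention from the text: the "right" test class is the source class name plus "Test".
    static String expectedTestClass(String sourceClass) {
        return sourceClass + "Test";
    }

    // coveringTestClasses: the test classes that cover a given piece of code in sourceClass.
    static Status classify(String sourceClass, Set<String> coveringTestClasses) {
        if (coveringTestClasses.isEmpty()) {
            return Status.RED;   // not covered at all
        }
        if (coveringTestClasses.contains(expectedTestClass(sourceClass))) {
            return Status.GREEN; // covered by the "right" test class
        }
        return Status.AMBER;     // covered, but only by other test classes
    }

    public static void main(String[] args) {
        System.out.println(classify("A", Set.of("ATest", "BTest"))); // prints GREEN
        System.out.println(classify("A", Set.of("BTest")));          // prints AMBER
        System.out.println(classify("A", Set.of()));                 // prints RED
    }
}
```

A convention based on annotations would only need a different `expectedTestClass`; the classification itself stays the same.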

But in the absence of such a feature, one can always have some fun writing a couple of scripts that achieve the same result. I’ve done that for the mutation testing tool PITEST when used in a Java Maven project on a Linux machine. The result is available in a repository named sharpen_pitest on GitHub. It works great for the little hobby projects that I work on, and it gave me some surprises when I ran it against older projects. The most important lesson so far: it’s amazing, and terrifying, how much mess you can have going on in a little hobby project, even when you’re doing your best writing unit tests and frenetically checking your test coverage reports to make sure no source code remains uncovered. Just making sure that all unit tests are in the right test class, and that source code is covered not just by unit tests but by unit tests in the right test class, also improves the quality of the unit tests. Why? I think it is because writing unit tests for class A in test class ATest also forces you to think about the functionality you want to test in terms of class A’s abstraction level.

How to Sharpen Your Unit Tests was originally published in Compendium on Medium, where people are continuing the conversation by highlighting and responding to this story.